Big O Notation Explained Quickly

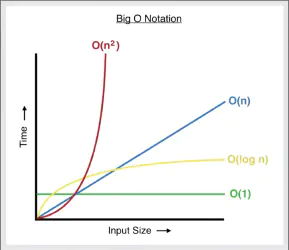

Big O Notation is a way to describe how the running time or memory use of a piece of code grows as the size of its input grows. It gives us a shared vocabulary for comparing algorithms and for saying how efficient, or how well optimized, a piece of code is.

In other words, we need a general language for saying how fast a piece of code processes its data and how much memory it needs to do so, independent of the particular machine it runs on. Below, we go through exactly what this means.
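As a small illustration of both ideas (speed and memory), here is a minimal sketch in Python that compares two hypothetical ways of checking a list for duplicates. Instead of timing the code, which depends on the machine, it counts the basic steps each version performs. The function names are made up for this example. The pairwise version is O(n^2) in time but needs only O(1) extra memory. The set-based version is O(n) in time on average but may need O(n) extra memory to hold the values it has seen.

```python
def has_duplicate_pairwise(items):
    """Compare every pair of items.

    Time: about n * (n - 1) / 2 comparisons in the worst case, so O(n^2).
    Memory: only a few counters, so O(1) extra space.
    """
    comparisons = 0
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            comparisons += 1
            if items[i] == items[j]:
                return True, comparisons
    return False, comparisons


def has_duplicate_with_set(items):
    """Remember every value seen so far in a set.

    Time: at most n set lookups, so O(n) on average.
    Memory: the set can grow to hold all n items, so O(n) extra space.
    """
    seen = set()
    lookups = 0
    for item in items:
        lookups += 1
        if item in seen:
            return True, lookups
        seen.add(item)
    return False, lookups


for n in (10, 100, 1000):
    data = list(range(n))  # all values distinct: the worst case for both functions
    _, pairwise = has_duplicate_pairwise(data)
    _, with_set = has_duplicate_with_set(data)
    print(f"n={n:>4}  pairwise comparisons={pairwise:>6}  set lookups={with_set:>4}")
```

For n = 10, 100 and 1000 the pairwise version makes 45, 4950 and 499500 comparisons, while the set version makes 10, 100 and 1000 lookups. Multiplying the input size by 10 multiplies the first count by roughly 100 but the second by only 10. That difference in growth is exactly what O(n^2) versus O(n) expresses. The set version buys its speed by spending extra memory.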